Tech media Tom’s Hardware published a blog post reporting that in its latest paper, Nvidia detailed a new AI decoding method called TiDAR, which ingeniously combines two model mechanisms: Autoregressive and Diffusion. Accelerate text generation by leveraging the “idle slots” of the GPU.

Autoregressive is a generation method where AI must guess the next character based on the previous one, just like a chain of characters, it can only generate one character after another in sequence.

Diffusion is a technique often used in AI painting, generating content by gradually removing noise. In TiDAR, it is employed to “guess” several possible words at once for subsequent screening.

IT Home quoted a blog post as saying that current language models typically generate one Token (word) at a time. This mechanism of generating one by one leads to extremely high computational costs and delays.

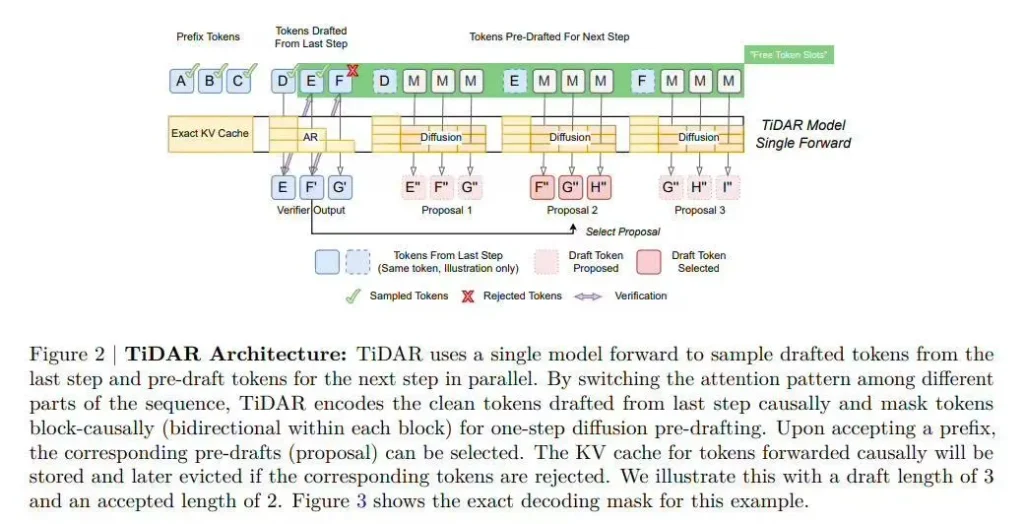

The core concept of TiDAR lies in leveraging the “idle slots” that are not utilized during the model inference process to generate multiple tokens in a single step without sacrificing generation quality, thereby significantly enhancing response speed and reducing GPU runtime.

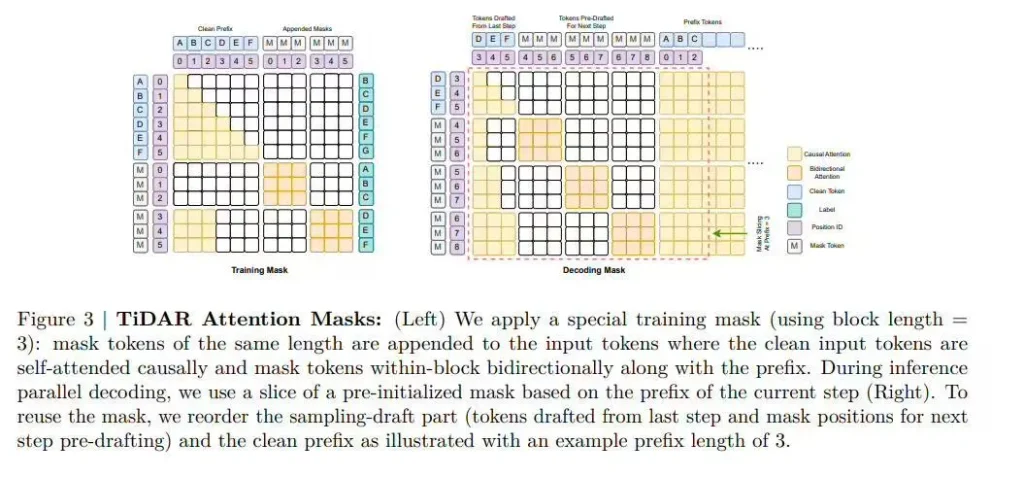

In terms of technical principles, TiDAR innovatively trains a single Transformer model to perform two tasks simultaneously: standard autoregressive “next word prediction” and diffusion-based “parallel drafting”.

Unlike the previous Speculative Decoding that relied on independent draft models, TiDAR divides the input into three regions through structured Attention masks: the prefix region, the validation region, and the drafting region.

Speculative decoding is an acceleration technology that first uses a small model to quickly draft a passage, and then a large model checks and corrects it. TiDAR attempts to complete these two steps within the same model.

This design enables the model to draft new tokens in parallel using the diffusion head while also verifying these drafts through the autoregressive head. Most importantly, it ensures the structural effectiveness of the KV Cache (KV Cache) and resolves the deployment challenges faced by early diffusion decoders.

The research team conducted tests based on the Qwen series models. In benchmark tests such as HumanEval and GSM8K, the accuracy of TiDAR is on par with or even slightly improved by the benchmark models.

In terms of speed, the 1.5-billion-parameter version of the TiDAR model achieved a 4.71-fold increase in throughput. The 8-billion-parameter version performed even more impressively, with a throughput 5.91 times that of the Qwen3-8B benchmark. This indicates that at the current test scale, TiDAR can effectively utilize the GPU’s video memory bandwidth and generate more tokens without increasing additional video memory transfer.

The media pointed out that despite the impressive experimental data, TiDAR still faces the challenge of scale expansion at present. The tests in the paper are limited to small and medium-sized models with less than 8 billion parameters, and do not involve customized kernel-level optimizations (such as fused kernels), only using the standard PyTorch environment.

As the number of model parameters and the context window expand, the computational density may become saturated, thereby compressing the cost advantage of “multi-token extensions”. Researchers say that in the future, verification will be conducted on larger-scale models to determine whether the technology can become a practical alternative for large-scale AI deployment in the cloud.