Gemini 3.0 Pro has officially launched in preview on Google AI Studio, with API access opened at the same time. It will roll out gradually across Google's products.

No fluff. Open the Model Card, and only one word comes to mind: overwhelming.

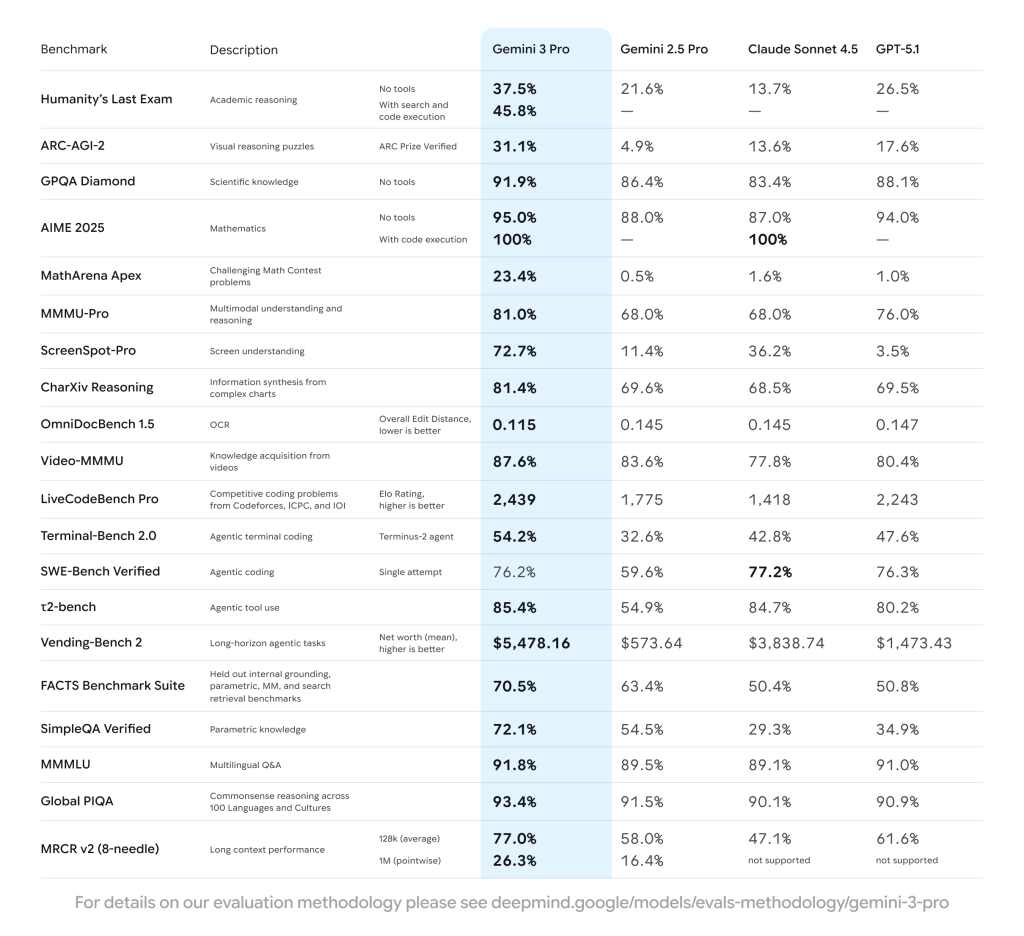

According to the test data disclosed by Google, Gemini 3 Pro has become, by a clear margin, the most powerful AI in mathematics on Earth right now. On the AIME 2025 math test, with code execution enabled, it achieved a perfect score of 100%. On MathArena Apex, the “hell mode” of math competitions, other large models, including GPT-5.1, were still struggling at around 1%, while Gemini 3 Pro reached 23.4%.

In programming, although it did not achieve SOTA on SWE-Bench, it is firmly in the first tier. Its Elo score on LiveCodeBench Pro exceeds 2,400, and it ranks first on the benchmarks for tool use and terminal operation.

What is truly astonishing is its “visual intelligence”. Its screen-understanding score on ScreenSpot-Pro reaches 72.7%, roughly double the previous best. This means agents are no longer blind, and it could completely reshape the way AI operates computers.

But that's not all. Google also dropped a small bombshell tonight: its own agentic programming platform, Google Antigravity.

Earlier, rumors online claimed that Gemini 3 could do “end-to-end programming”, and many assumed the model itself had made some magical leap. It now appears that the leap comes less from the model alone and more from Google exploring how to achieve end-to-end programming through better systems engineering.

If Cursor is currently the most powerful “exoskeleton”, helping you write code faster through AI completion, then Antigravity is aiming for “autonomous driving”. It is no longer just an editor but an agent-first development environment. By combining Gemini 3.0 with the Gemini 2.5 Computer Use model, which can control a browser, its agents can write code, run tests in the terminal, open a browser to verify the UI, and fix any errors they find, all on their own.

No storytelling, just flexing muscle.

With this round of hardcore releases, Google is making an announcement: the new king has arrived.

The brute-force aesthetics of topping the charts: not just a leap in raw intelligence, but a transformation in agent capabilities

In the AI world, people are used to models edging each other out by slim margins, but the performance report for Gemini 3 Pro is nothing short of dazzling.

According to the data disclosed in the Model Card, Gemini 3 Pro takes top rankings across the board on key benchmarks for reasoning, multimodality, and agentic tool use.

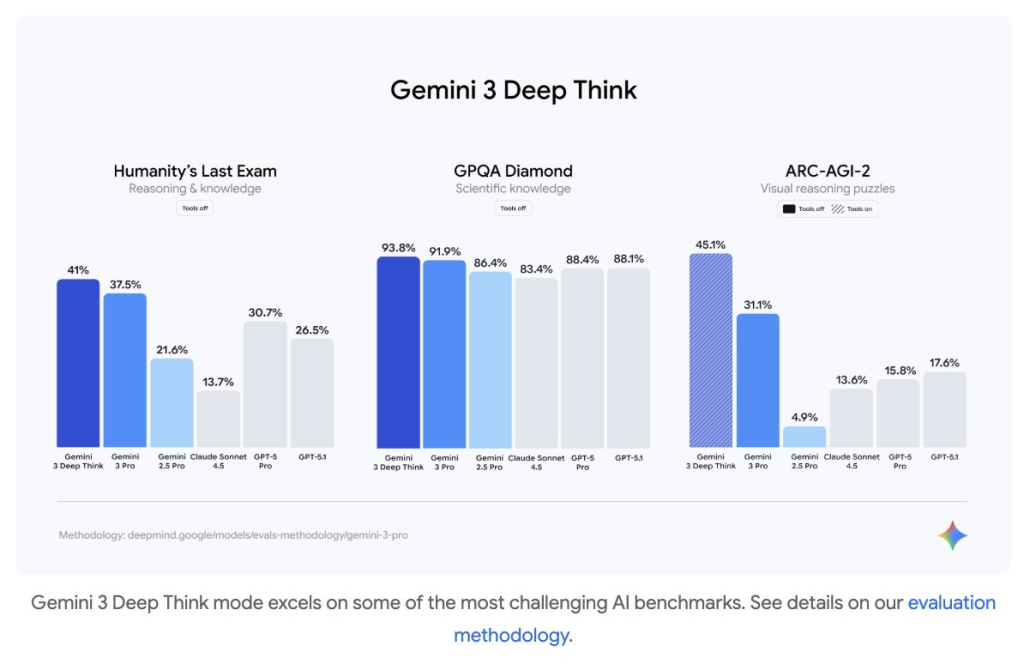

Let's start with the test that represents the “ceiling” of human intelligence: Humanity's Last Exam, a yardstick for the limits of academic reasoning. GPT-5.1 scored 26.5% and Claude Sonnet 4.5 only 13.7%, while Gemini 3 Pro reached 37.5%. At this level of reasoning, an 11-percentage-point lead over GPT-5.1 suggests a genuinely different depth of understanding when tackling complex academic problems.

And that is not even the limit. Google also held back Gemini 3 Deep Think (its deep reasoning mode), which, without using any tools, pushes the HLE score further to 41.0%. It seems humanity's last bastion won't hold out for long either.

Its dominance can be seen in every field of mathematics and physics.

AIME 2025 (American Invitational Mathematics Examination): With code execution, Gemini 3 Pro reached an astonishing 100% accuracy. Yes, a perfect score. Even in the “naked test” (tool-free mode), it still scored 95.0% (versus 94.0% for GPT-5.1 and 87.0% for Claude Sonnet 4.5).

MathArena Apex (the “hell mode” of math competitions): While other large models, including GPT-5.1, were still struggling at around 1%, Gemini 3 Pro reached 23.4%. This means that in many areas where AI previously couldn't even “understand the question”, Gemini 3 has begun to find solutions.

Even more important are the gains in agent-related capabilities.

Gemini has long led in multimodal capabilities, and this generation has been specifically optimized for screen understanding. This is the key to whether the next generation of agents can truly take over operating computers for humans.

Look at the data in the “ScreenSpot-Pro” column:

GPT-5.1: 3.5%

Gemini 3 Pro: 72.7%

That is a more than 20-fold lead! It shows that Gemini 3 Pro is no longer just a chat box. It has genuine “visual intelligence” and can understand complex operating-system interfaces much as a human does.
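A ratio and a percentage-point gap are easy to conflate, so here is a minimal Python sketch that computes both from the scores quoted in this article. The helper name `compare_scores` is hypothetical, written only for illustration; the inputs are the Model Card figures cited above.

```python
def compare_scores(ours: float, theirs: float) -> tuple[float, float]:
    """Return (percentage-point gap, ratio) between two benchmark scores given in percent.

    Hypothetical helper for illustration only.
    """
    if theirs <= 0:
        raise ValueError("baseline score must be positive to compute a ratio")
    # Round both values to one decimal place, matching how the scores are reported.
    return round(ours - theirs, 1), round(ours / theirs, 1)


# Figures as quoted in this article (Gemini 3 Pro vs GPT-5.1).
screenspot_gap, screenspot_ratio = compare_scores(72.7, 3.5)  # ScreenSpot-Pro
hle_gap, hle_ratio = compare_scores(37.5, 26.5)               # Humanity's Last Exam

print(f"ScreenSpot-Pro: +{screenspot_gap} points, {screenspot_ratio}x")
print(f"HLE: +{hle_gap} points, {hle_ratio}x")
```

Running it gives a gap of 69.2 points and a ratio of about 20.8x for ScreenSpot-Pro, versus 11.0 points and 1.4x for HLE. A ratio magnifies differences when the baseline is close to zero, which is part of why the ScreenSpot-Pro comparison looks so dramatic.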

Gemini 3.0 also keeps its traditional strengths, such as an ultra-large 1M-token context window, native multimodality, and strong long-video and multilingual processing.

Google also posted an interesting benchmark: on Vending-Bench 2, which simulates running a vending machine business over a long period, Gemini 3 Pro ended with a net worth of $5,478.16, while GPT-5.1 reached only $1,473.43.

As for the earlier online rumor that Gemini 3 would “put programmers out of work, end to end”, the reality is that Gemini 3.0 Pro sits at the top of the AI field but has not “disrupted programming”.

On SWE-Bench Verified, which measures software engineering capability, Gemini 3 Pro scored 76.2%. Strong as that is, it did not surpass Claude Sonnet 4.5 (77.2%) to claim SOTA. This suggests it still has limitations with very long-horizon tasks and highly complex back-end logic.

That is hardly surprising. With every major model now competing hard on coding, it is genuinely difficult to stand out in this field.

For now, Gemini's strength lies less in restructuring an entire back-end architecture. But if you want a website with a strong modern design aesthetic, a 3D spaceship game, or complex interactive SVG animations, it can deliver stunning, directly runnable results from a single prompt.

Antigravity: exploring agentic programming

With the most powerful models and computing power, Google has begun to “flip the table” at the application layer. Tonight, Google threw out a “little trump card” – Google Antigravity.

Not long ago, the headlines were about model companies trying to acquire AI coding startups. This time, Google has moved quickly to release its own development platform.

This is not merely a new IDE; it is what Google calls an agent-first development platform. Here, developers move up from “code monkeys” to “architects”, and Gemini 3 becomes an “executive partner” with full access to the editor, terminal and browser.

To deliver this experience, Google has assembled a “model army” working together behind the scenes:

Gemini 3: As the brain, it is responsible for advanced reasoning and code writing.

Gemini 2.5 Computer Use: As the hand and eye, specifically controlling the browser for UI verification and testing.

Nano Banana: As the graphic designer, responsible for generating images and UI assets.

This closed loop, connecting the underlying models all the way up to the top-level interaction, undoubtedly deals a crushing blow to existing AI editors like Cursor.

The most interesting ability of Antigravity lies in parallelism. The official materials clearly state that developers can collaborate with multiple intelligent agents, and these agents can independently plan and execute complex end-to-end software tasks on your behalf simultaneously.

Imagine this workflow: You issue an instruction, and Antigravity instantly splits into multiple agents – Agent A is responsible for writing the back-end logic, Agent B is responsible for running test cases in the terminal, and Agent C directly opens the browser to verify the interaction effect of the front-end UI. They work in tandem, like a well-coordinated agile development team, and you only need to inspect the “artifacts” they submit.
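The fan-out workflow described above can be sketched with Python's `asyncio`. This is a toy illustration under stated assumptions, not Antigravity's actual interface: the agent roles, the `run_agent` and `dispatch` helpers, and the `Artifact` type are all hypothetical, and each agent's real work (calling a model, running a terminal, driving a browser) is simulated with a short sleep.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Artifact:
    """A deliverable an agent hands back for human review (hypothetical structure)."""
    agent: str
    summary: str


async def run_agent(name: str, task: str, seconds: float) -> Artifact:
    # Placeholder for real work; here we only simulate latency.
    await asyncio.sleep(seconds)
    return Artifact(agent=name, summary=f"finished: {task}")


async def dispatch(instruction: str) -> list[Artifact]:
    # Fan one instruction out into independent sub-tasks, one per agent.
    plan = [
        ("Agent A", f"write back-end logic for '{instruction}'", 0.03),
        ("Agent B", "run the test suite in a terminal", 0.02),
        ("Agent C", "open a browser and check the front-end UI", 0.01),
    ]
    # asyncio.gather runs the coroutines concurrently and returns their
    # results in the same order as the inputs, whatever order they finish in.
    return await asyncio.gather(*(run_agent(n, t, s) for n, t, s in plan))


if __name__ == "__main__":
    for artifact in asyncio.run(dispatch("add a login page")):
        print(f"{artifact.agent}: {artifact.summary}")
```

Because `asyncio.gather` preserves input order, the artifacts come back in plan order even though Agent C finishes first, which keeps the human review step predictable.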

Antigravity is free to use. So far there are not many user reports about it online, but most of them are positive.

Fully replacing Cursor is not realistic yet: a truly end-to-end experience on complex projects will need a more mature model. For simple projects, though, building may become even easier.

The whole family pulls together: TPUs and Search

In the second half of the large-model race, competition is no longer about a single algorithmic flash of inspiration, but about who has more surplus compute, broader data, and more sustained investment. One detail of Gemini 3 Pro's victory stands out: it was trained on Google's own TPUs.

While AI companies around the world anxiously wait out the delivery cycles of NVIDIA GPUs, Google is sitting on its own vast TPU mine. TPUs are purpose-built for machine-learning workloads such as large-model training, and they come with high-bandwidth memory (HBM), which lets them handle enormous numbers of model parameters and very large batch sizes. It is this surplus of TPU compute that gives Gemini 3 Pro the room to scale up its parameter count freely.

Compute also needs “fuel”. Gemini 3 Pro's training data covers every dimension: it devours public web documents, code repositories, images, audio and video. More crucially, Google explicitly mentions the use of user data, meaning user interaction data from Google's vast product ecosystem, used within the framework of its privacy terms.

Finally, this overflowing intelligence is being injected into Google Search, where Gemini 3 now powers AI Mode. When you search for a complex concept (such as how RNA polymerase works), Gemini 3 no longer just throws a pile of cold links at you; it uses its reasoning ability to generate an immersive interactive chart or simulation on the fly.

From TPU silicon dominance at the bottom layer, to model intelligence in the middle, to the Antigravity development ecosystem and generative search at the top: what Google showed tonight was not merely a top-tier model, but a seamless future that only a giant can build.

Today, large-model performance has outgrown what benchmark scores can capture. Even the most advanced and complex frontier benchmarks are starting to lose their resolution. Scientifically quantifying the subtle differences between models has become a specialized “quantitative science”, and casual hands-on testing by users can hardly capture the full picture.

Hands-on test cases are now used mostly to gauge a model's aesthetic sense and the quality of its one-shot output.

On one-shot output, Gemini 3.0 looks very likely to come out ahead in this update.

As models' one-shot output keeps improving, the question for developers will increasingly be whether their taste can outpace the model's and whether their ideas are distinctive enough.